Abstract

Large language models (LLMs) are being increasingly deployed as part of pipelines that repeatedly process or generate data of some sort. However, a common barrier to deployment is the frequent and often unpredictable errors that plague LLMs. Acknowledging the inevitability of these errors, we propose data quality assertions to identify when LLMs may be making mistakes. We present SPADE, a method for automatically synthesizing data quality assertions that identify bad LLM outputs. We make the observation that developers often identify data quality issues during prototyping prior to deployment, and attempt to address them by adding instructions to the LLM prompt over time. SPADE therefore analyzes histories of prompt versions over time to create candidate assertion functions and then selects a minimal set that fulfills both coverage and accuracy requirements. In testing across nine different real-world LLM pipelines, SPADE efficiently reduces the number of assertions by 14% and decreases false failures by 21% when compared to simpler baselines. SPADE has been deployed as an offering within LangSmith, LangChain’s LLM pipeline hub, and has been used to generate data quality assertions for over 2000 pipelines across a spectrum of industries.

Key Insights

Discovering assertions from prompt histories

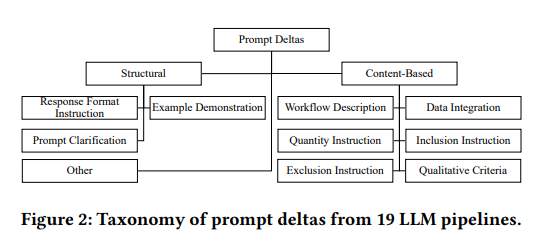

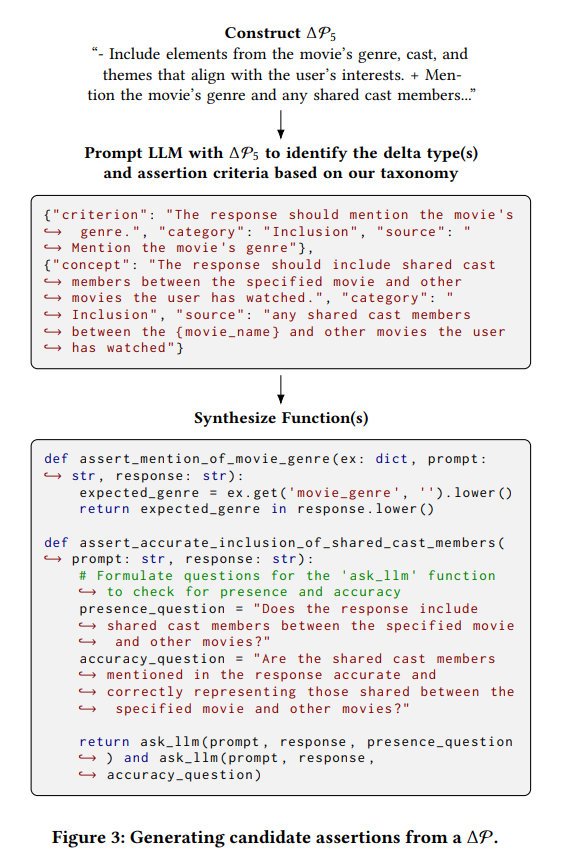

This paper first introduces the observation that prompt histories can be used to generate candidate assertions. Specifically, the differences between prompt versions (prompt deltas) across commits to an LLM pipeline capture qualitative ideals that the developer hopes the LLM outputs will embody.

The authors first categorize each prompt delta based on a proposed taxonomy, then generate assertion functions to validate that LLM outputs embody the desired quality in the prompt delta.
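To make the first step concrete, here is a minimal sketch of extracting prompt deltas from a version history using the standard library's `difflib`. The `categorize` function is a hypothetical keyword stand-in for the LLM-based categorization the paper describes, and its "inclusion"/"exclusion"/"other" labels are a deliberate simplification, not the paper's full taxonomy. The example prompt history is invented for illustration.

```python
import difflib


def prompt_deltas(versions: list[str]) -> list[list[str]]:
    """Return the lines added in each prompt version relative to the previous one."""
    deltas = []
    for old, new in zip(versions, versions[1:]):
        diff = difflib.ndiff(old.splitlines(), new.splitlines())
        # ndiff prefixes added lines with "+ "; removed ("- ") and hint ("? ") lines are skipped.
        added = [line[2:] for line in diff if line.startswith("+ ")]
        deltas.append(added)
    return deltas


def categorize(instruction: str) -> str:
    """Hypothetical keyword classifier standing in for an LLM categorization call."""
    text = instruction.lower()
    if any(word in text for word in ("do not", "don't", "avoid", "never")):
        return "exclusion"
    if any(word in text for word in ("include", "mention", "always")):
        return "inclusion"
    return "other"


# Invented prompt history: each commit appends one instruction.
history = [
    "Write a sales email for {prospect}.",
    "Write a sales email for {prospect}.\nAlways mention the prospect's company.",
    "Write a sales email for {prospect}.\nAlways mention the prospect's company.\nDo not use emojis.",
]

for delta in prompt_deltas(history):
    for instruction in delta:
        print(categorize(instruction), "->", instruction)
```

Each categorized delta would then be handed to an assertion generator; a keyword classifier is used here only so the sketch runs without a model.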

Subsumption graph for candidate assertion filtering

The authors first tried two simpler approaches, each of which ran into problems:

- Baseline: thresholding each assertion on failure coverage (recall) and false failure rate (FFR, i.e., false positive rate). The problem is that FFRs add up across assertions, so interactions between assertions need to be considered.
- Optimization: an integer linear program (ILP) that selects a minimal set of assertions subject to constraints on failure coverage and false failure rate. The problem is that the solution is very specific to the user-provided grades, which may not encompass all failure modes.
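The difference between the two approaches shows up even on tiny data. The sketch below uses invented grades and assertion results. Each of `a1` to `a3` passes the per-assertion thresholds, yet together they flag half of the good outputs. The exhaustive search in `minimal_set` is a swapped-in stand-in for an ILP solver (it only scales to a handful of candidates), and the threshold values are assumptions for illustration.

```python
from itertools import combinations

# Invented toy data: user grades (True = bad output) for nine outputs,
# and which outputs each candidate assertion flags as failing.
bad = [True] * 3 + [False] * 6
flags = {
    "a1": [True, False, False, True, False, False, False, False, False],
    "a2": [False, True, False, False, True, False, False, False, False],
    "a3": [False, False, True, False, False, True, False, False, False],
    "a4": [True, True, True, False, False, False, False, False, False],
}


def coverage_and_ffr(selected):
    """Coverage: share of bad outputs flagged by any selected assertion.
    FFR: share of good outputs flagged by any selected assertion."""
    flagged = [any(flags[a][i] for a in selected) for i in range(len(bad))]
    n_bad = sum(bad)
    n_good = len(bad) - n_bad
    coverage = sum(f for f, b in zip(flagged, bad) if b) / n_bad
    ffr = sum(f for f, b in zip(flagged, bad) if not b) / n_good
    return coverage, ffr


def threshold_baseline(min_cov, max_ffr):
    """Keep every assertion that passes both thresholds on its own."""
    kept = []
    for a in sorted(flags):
        cov, ffr = coverage_and_ffr([a])
        if cov >= min_cov and ffr <= max_ffr:
            kept.append(a)
    return kept


def minimal_set(min_cov, max_ffr):
    """Smallest subset meeting both constraints jointly (brute-force stand-in for an ILP)."""
    names = sorted(flags)
    for k in range(1, len(names) + 1):
        for combo in combinations(names, k):
            cov, ffr = coverage_and_ffr(combo)
            if cov >= min_cov and ffr <= max_ffr:
                return list(combo)
    return None


baseline = threshold_baseline(0.3, 0.2)
print(baseline, coverage_and_ffr(baseline))  # each passes alone, but joint FFR is 0.5
print(minimal_set(0.9, 0.2))
```

Note that `minimal_set` happily drops `a1` to `a3` because the grades say `a4` suffices, which is exactly the overfitting-to-grades concern raised above.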

Subsumption graphs capture implication between assertions. If assertion X checks qualities A and B while assertion Y checks only quality A, then passing X implies passing Y, which makes Y redundant in some sense.
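As a rough illustration, the sketch below models each assertion as the set of qualities it checks and draws an edge from X to Y when Y's qualities are a strict subset of X's. Representing assertions as quality sets is an assumption made for the example; deciding subsumption between arbitrary assertion functions is considerably harder than a set comparison.

```python
# Hypothetical mapping from each candidate assertion to the qualities it checks.
checks = {
    "X": {"A", "B"},
    "Y": {"A"},
    "Z": {"C"},
}


def subsumption_graph(checks: dict[str, set[str]]) -> set[tuple[str, str]]:
    """Return edges (x, y) meaning: passing x implies passing y."""
    edges = set()
    for x, qx in checks.items():
        for y, qy in checks.items():
            # Strict subset, so two assertions with identical qualities do not
            # subsume each other (that case would need a tie-break).
            if x != y and qy < qx:
                edges.add((x, y))
    return edges


def redundant(checks: dict[str, set[str]]) -> set[str]:
    """Assertions implied by some other assertion."""
    return {y for _, y in subsumption_graph(checks)}


print(subsumption_graph(checks))
print(redundant(checks))
```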

The final approach incorporates the subsumption graph into the ILP: the objective is penalized whenever a non-subsumed (non-redundant) assertion is left out of the solution set.
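A toy version of this combined objective is sketched below. It reads "non-subsumed" as "neither selected nor implied by a selected assertion" and charges a fixed penalty per such candidate; that reading, the penalty weight, and the data are all assumptions, not the paper's exact formulation. As before, brute force replaces an ILP solver.

```python
from itertools import combinations

# Invented toy inputs: user grades (True = bad output), which outputs each
# assertion flags, and subsumption edges (x, y) meaning x implies y.
bad = [True, True, False, False]
flags = {
    "X": [True, True, False, False],
    "Y": [True, False, False, False],
    "Z": [False, True, False, False],
}
subsumes = {("X", "Y")}


def metrics(selected):
    """Return (failure coverage, false failure rate) for a set of assertions."""
    flagged = [any(flags[a][i] for a in selected) for i in range(len(bad))]
    coverage = sum(f for f, b in zip(flagged, bad) if b) / sum(bad)
    ffr = sum(f for f, b in zip(flagged, bad) if not b) / (len(bad) - sum(bad))
    return coverage, ffr


def uncovered(selected):
    """Candidates that are neither selected nor implied by a selected assertion."""
    return [a for a in flags
            if a not in selected and not any((s, a) in subsumes for s in selected)]


def select(min_cov, max_ffr, penalty=2.0):
    """Minimize set size plus a penalty for each uncovered candidate,
    subject to coverage and FFR constraints (brute-force stand-in for the ILP)."""
    best, best_cost = None, float("inf")
    names = sorted(flags)
    for k in range(len(names) + 1):
        for combo in combinations(names, k):
            cov, ffr = metrics(combo)
            if cov < min_cov or ffr > max_ffr:
                continue
            cost = len(combo) + penalty * len(uncovered(combo))
            if cost < best_cost:
                best, best_cost = list(combo), cost
    return best


print(select(1.0, 0.0, penalty=0.0))  # grades alone: X is enough
print(select(1.0, 0.0))               # with the penalty, Z is kept too
```

With no penalty, `Z` looks unnecessary on the graded data; with the penalty it is kept because nothing selected implies it, so it may still catch failure modes the grades miss.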

Weaknesses

I would say the most glaring deficiency is the assumption that all assertions are equally weighted. In this paper, an LLM generation is deemed successful only if it passes all assertions. Depending on how the developer implements blocking or retrying strategies, a sizable number of stylistic or non-critical assertions flagging “less than desirable but passable” generations in production may lead to undesirably high handling rates in customer-facing applications. The relative criticality of assertions could be factored into the assertion selection phase.

Secondly, based on my interpretation of Figure 3, the incremental impact of introducing the taxonomy into the assertion generation workflow is unclear. I would be interested in an ablation; my intuition is that there is a good chance high-quality assertion functions can still be generated without the extra category information. The authors’ observation that the majority of assertions are inclusion and exclusion criteria further supports this.

Takeaways / Thoughts

Some of my thoughts perhaps run counter to what the paper proposes, but I’d still like to discuss them.

Assertions are unit tests for LLMs

We can treat LLM assertions the way we treat unit tests for code: each piece of logic should be tested with positive and negative test cases. The challenge is how to generate a large number of test cases that are accurate and have good coverage.
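Here is a minimal sketch of what that looks like for a single assertion: the function under test is the assertion itself, and it is exercised against outputs it should accept and outputs it should reject. The assertion (catching template variables that leaked into the output) and the test strings are hypothetical examples.

```python
import re


def no_unfilled_placeholders(output: str) -> bool:
    """Hypothetical assertion: fail if a template variable like {name} leaked into the output."""
    return re.search(r"\{[A-Za-z_][A-Za-z0-9_]*\}", output) is None


# Positive cases: outputs the assertion should accept.
PASSING = [
    "Hi Dana, I noticed Acme is hiring data engineers.",
    "Pricing starts at $20 per seat.",
]
# Negative cases: outputs the assertion should reject.
FAILING = [
    "Hi {first_name}, I noticed {company} is hiring.",
    "Best regards, {sender}",
]


def run_cases() -> int:
    """Check the assertion against both sets of cases; return how many ran."""
    for text in PASSING:
        assert no_unfilled_placeholders(text), f"false failure: {text!r}"
    for text in FAILING:
        assert not no_unfilled_placeholders(text), f"missed failure: {text!r}"
    return len(PASSING) + len(FAILING)


print(run_cases(), "cases passed")
```

A false failure here corresponds to the paper's FFR, and a missed failure to a gap in coverage.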

A popular programming paradigm is test-driven development, where a developer writes failing tests first and then iterates on the code until it passes them. I wonder if we can take a similar approach to LLM output evaluation, where the developer first specifies:

- Non-negotiable qualities that must be fulfilled (e.g., the generation should not contain toxic phrases).
- Stylistic preferences that would be great to see in the output but are not the end of the world if missing (e.g., verbosity).

The prompt starts as an empty string and is gradually built up until it meets the test cases. This can probably be achieved with tools like Promptimize or DSPy. We can then layer on more sophisticated techniques like red-teaming: getting another LLM to generate challenging test cases that probe the boundaries of these prompts and assertions.
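A naive version of this loop is sketched below. It is not how Promptimize or DSPy work; it simply appends an instruction for every failing check and stops once all non-negotiable checks pass, tolerating stylistic misses. `fake_llm` is a toy stand-in for a model call that only "obeys" instructions it can literally see, and the checks and instructions are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Check:
    name: str
    must_have: bool               # True = non-negotiable, False = stylistic preference
    passes: Callable[[str], bool]
    instruction: str              # text appended to the prompt when the check fails


def fake_llm(prompt: str) -> str:
    """Toy stand-in for a model call: it only follows instructions it can see."""
    text = "Hey there!!! Our tool is amazing and you will love it."
    if "Do not use exclamation marks." in prompt:
        text = text.replace("!!!", ".")
    if "End with a question." in prompt:
        text = text.rstrip(".") + ". Could we chat on Friday?"
    return text


CHECKS = [
    Check("no_shouting", True, lambda o: "!" not in o, "Do not use exclamation marks."),
    Check("has_cta", True, lambda o: o.endswith("?"), "End with a question."),
    Check("short", False, lambda o: len(o.split()) <= 12, "Use at most 12 words."),
]


def build_prompt(max_rounds: int = 5) -> str:
    """Grow a prompt from empty until every non-negotiable check passes."""
    prompt = ""
    for _ in range(max_rounds):
        output = fake_llm(prompt)
        failing = [c for c in CHECKS if not c.passes(output)]
        if not any(c.must_have for c in failing):
            break  # only stylistic checks (if any) still fail; tolerate them
        for c in failing:
            if c.instruction not in prompt:
                prompt += c.instruction + "\n"
    return prompt


final_prompt = build_prompt()
print(repr(final_prompt))
print(fake_llm(final_prompt))
```

In this run the final output satisfies both must-have checks but still exceeds the stylistic word limit, which the loop accepts by design.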

Assertions should not be coupled with prompts

Suggesting assertions from prompt deltas is a “bottom-up” approach that implicitly couples assertions to prompts. However, prompts tend to vary across model providers and even across model versions. I favor a “top-down” approach, i.e., thinking from first principles: define what the output needs to look like and keep prompts flexible as long as they produce outputs with the desired qualities. We may swap out specific LLMs and their accompanying prompts at any time, but the assertions should remain fixed unless the set of desired qualities changes.
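One way to express this decoupling in code is to keep the assertion suite in its own structure that knows nothing about prompts, and treat each model/prompt pair as a swappable configuration evaluated against the same suite. The model names, prompts, and stubbed `generate` callables below are hypothetical placeholders for real provider calls.

```python
from dataclasses import dataclass
from typing import Callable

# The assertion suite encodes desired output qualities and never references a prompt.
ASSERTIONS: dict[str, Callable[[str], bool]] = {
    "mentions_company": lambda o: "Acme" in o,
    "under_100_words": lambda o: len(o.split()) < 100,
}


@dataclass
class PipelineConfig:
    model: str                      # hypothetical model identifier
    prompt: str                     # model-specific prompt; free to change
    generate: Callable[[str], str]  # stand-in for the provider call


def evaluate(config: PipelineConfig) -> dict[str, bool]:
    """Run one configuration and score its output against the fixed suite."""
    output = config.generate(config.prompt)
    return {name: check(output) for name, check in ASSERTIONS.items()}


configs = [
    PipelineConfig("model-a", "Write a short email to Acme.",
                   lambda p: "Hi Acme team, would a quick call next week work?"),
    PipelineConfig("model-b", "You are a sales rep. Email Acme briefly.",
                   lambda p: "Hello there!"),
]
for cfg in configs:
    print(cfg.model, evaluate(cfg))
```

Swapping `model-b` in for `model-a` changes the prompt freely, while the suite stays put and reports that the new output no longer mentions the company.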

Subsumed assertions are not necessarily redundant

In my experience developing assertions for online LLM output evaluation, I see the benefit of giving these checks “multiple fields of view”. For example, say I have a RAG pipeline that generates sales emails for prospects. I have assertions that check quality at the paragraph or sentence level, such as whether the email contains the typical sales email components: a hook, a value proposition, and a call-to-action (CTA). I can also have assertions at the email level, such as whether the email carries a persuasive tone or sounds coherent.

One might point out that passing the email structure assertion implies passing the persuasive tone assertion (since this structure is arguably meant for persuasive writing). The key difference is that the email structure assertion checks “how” you arrive at a persuasive email, while the persuasive tone assertion checks whether the overall email is ultimately perceived as persuasive, regardless of whether it has a hook, a value proposition, and a CTA. Conversely, an email can fulfill the structure without being persuasive.
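The two fields of view can be sketched as two independent checks: a process check over the email's components and an outcome check over the whole text. This assumes the pipeline returns the email as a dict with `hook`, `value_prop`, and `cta` keys, and `judge` is a hypothetical scorer (for example, an LLM asked to rate persuasiveness from 0 to 1); the fixed-score judge below exists only to make the sketch runnable.

```python
from typing import Callable

REQUIRED_PARTS = ("hook", "value_prop", "cta")


def has_structure(email: dict[str, str]) -> bool:
    """Process check: every sales-email component is present and non-empty."""
    return all(email.get(part, "").strip() for part in REQUIRED_PARTS)


def is_persuasive(email: dict[str, str], judge: Callable[[str], float],
                  threshold: float = 0.7) -> bool:
    """Outcome check: the email as a whole reads as persuasive, per a hypothetical judge."""
    full_text = " ".join(email.get(part, "") for part in REQUIRED_PARTS)
    return judge(full_text) >= threshold


def flat_judge(text: str) -> float:
    """Toy judge that finds every email unconvincing."""
    return 0.3


email = {
    "hook": "Saw your post about scaling support.",
    "value_prop": "We sell software.",
    "cta": "Reply if interested.",
}

# Structure without persuasion: the process check passes, the outcome check fails.
print(has_structure(email), is_persuasive(email, flat_judge))
```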

This is also consistent with the earlier point that assertions should not be coupled with prompts; my hunch is that the key difference between “principles” and “prompts” is just a matter of level of perspective.

Endowing an evaluation suite with multiple fields of view allows outputs to be checked more comprehensively, even when subsumption exists. In other words, we can strike a balance between checking processes and checking outcomes.

Alternative ideas for mitigating redundancy

One idea to solve the redundancy problem is to use retrieval systems based on embeddings. Generate vector embeddings of candidate assertion functions and store them in a vector database. Before adding each one to the vector database, retrieve top
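The paragraph above is cut off, so the retrieval step in this sketch is an assumption about how such a check could work, not the author's stated procedure: each new assertion description is compared against its top-k stored neighbors and rejected if the closest one exceeds a cosine-similarity threshold. The bag-of-words `embed` function and the in-memory `AssertionStore` are toy stand-ins for an embedding model and a vector database, and the 0.8 threshold is arbitrary.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding'; a real system would call an embedding model."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class AssertionStore:
    """In-memory stand-in for a vector database of assertion descriptions."""

    def __init__(self, threshold: float = 0.8, k: int = 3):
        self.threshold = threshold  # assumed similarity cutoff for "redundant"
        self.k = k
        self.items: list[tuple[str, Counter]] = []

    def top_k(self, vec: Counter) -> list[tuple[float, str]]:
        """Return the k most similar stored assertions as (score, text) pairs."""
        scored = [(cosine(vec, v), text) for text, v in self.items]
        return sorted(scored, reverse=True)[: self.k]

    def add(self, text: str) -> bool:
        """Store the assertion unless a near-duplicate already exists."""
        vec = embed(text)
        neighbors = self.top_k(vec)
        if neighbors and neighbors[0][0] >= self.threshold:
            return False
        self.items.append((text, vec))
        return True


store = AssertionStore()
for candidate in [
    "check the email mentions the prospect company",
    "check that the email mentions the prospect company",  # near-duplicate
    "check the email has no emojis",
]:
    print(store.add(candidate), candidate)
```

Note that pure textual similarity would not catch the kind of implication a subsumption graph encodes, and, per the section above, some near-duplicates may be worth keeping anyway.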

Not all assertions should be weighted equally

In previous sections, I discussed creating assertions from top-down principles and giving the validation suite multiple fields of view. Both suggest that not all assertions should be treated equally. The more critical question for LLM evaluation systems is what to do with failed generations. The blocking strategy is key when implementing these assertions in production: which failed assertions should block the normal LLM workflow?

When designing blocking strategies, it is often a good idea to categorize assertions into the two types mentioned above, non-negotiable qualities versus stylistic preferences, echoing my thoughts about mildly low-quality versus catastrophic generations in my earlier blog post. A violation of a non-negotiable quality is often catastrophic, something we’d like to avoid at all costs, and is usually rather easy to discern when judging the final output as a whole. But a violation of a stylistic preference (e.g., you asked for fewer than 100 words but the output has 110) may not be worth blocking the pipeline for if the overall output is still acceptable.
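A minimal sketch of such a two-tier blocking strategy follows: each assertion carries a severity, and only critical failures block the output while stylistic failures are merely reported. The severity names, the banned-word list standing in for a toxicity check, and the 100-word limit are illustrative assumptions.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable


class Severity(Enum):
    CRITICAL = "critical"    # non-negotiable quality: block on failure
    STYLISTIC = "stylistic"  # preference: report, but let the output through


@dataclass
class Assertion:
    name: str
    severity: Severity
    check: Callable[[str], bool]


BANNED_WORDS = {"idiot", "stupid"}  # hypothetical stand-in for a toxicity check


def triage(output: str, assertions: list[Assertion]) -> tuple[bool, list[str]]:
    """Return (blocked, names of failed assertions)."""
    failed = [a for a in assertions if not a.check(output)]
    blocked = any(a.severity is Severity.CRITICAL for a in failed)
    return blocked, [a.name for a in failed]


SUITE = [
    Assertion("no_banned_words", Severity.CRITICAL,
              lambda o: not any(w in o.lower() for w in BANNED_WORDS)),
    Assertion("under_100_words", Severity.STYLISTIC,
              lambda o: len(o.split()) < 100),
]

print(triage("word " * 110, SUITE))          # (False, ['under_100_words'])
print(triage("You idiot, buy now.", SUITE))  # (True, ['no_banned_words'])
```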

It is still worth implementing these stylistic checks, and we may still wish to regenerate the output with their feedback (à la Reflexion), but ultimately we can allow the output to be used downstream. We can also log these failures to get a sense of how our prompts fare against these checks, so we can find ways to optimize them further while affording ourselves flexibility in the meantime.
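The sketch below shows one way to handle stylistic failures along these lines: retry a bounded number of times with the failed check names fed back into the prompt (a much simplified take on the Reflexion idea of feeding failure feedback into the next attempt), then log whatever still fails and return the output anyway. The `generate` callable, the feedback wording, and the retry budget are assumptions; the toy generator exists only to make the example runnable.

```python
import logging
from typing import Callable

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("assertions")


def handle_stylistic(prompt: str, generate: Callable[[str], str],
                     checks: dict[str, Callable[[str], bool]],
                     max_retries: int = 1) -> str:
    """Retry with feedback on stylistic failures, then log and pass the output through."""
    output = generate(prompt)
    for _ in range(max_retries):
        failed = [name for name, check in checks.items() if not check(output)]
        if not failed:
            return output
        feedback = "Your previous answer failed these checks: " + ", ".join(failed)
        output = generate(f"{prompt}\n\nPrevious answer:\n{output}\n\n{feedback}")
    remaining = [name for name, check in checks.items() if not check(output)]
    if remaining:
        # Record the miss for later prompt tuning instead of blocking the pipeline.
        log.info("Passing output downstream despite stylistic failures: %s", remaining)
    return output


def toy_generate(prompt: str) -> str:
    """Toy model: writes a long reply unless it has been given feedback."""
    return "short reply" if "failed these checks" in prompt else "word " * 120


checks = {"under_100_words": lambda o: len(o.split()) < 100}
print(handle_stylistic("Write a reply.", toy_generate, checks))
```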